In the era of digital transformation, where user traffic, data, and processing demands are constantly growing, Scalability has become a vital factor for every technological system. No matter how well an application or platform is designed, without the ability to scale it will eventually hit limits in performance, cost, and user experience. This article explains what Scalability is, how it is implemented, which factors influence it, and the benefits and challenges of applying it in IT systems.

What is Scalability?

Scalability is the ability of a system, application, or technological infrastructure to expand its operational scale to meet increasing demands without compromising performance, stability, or user experience. In other words, a highly scalable system can handle more users, more data, or larger workloads simply by adding or adjusting resources.

Scalability is not just about “adding servers”; it is closely linked to system architecture, data organization, resource allocation methods, and long-term operational strategies. In practice, Scalability is often considered alongside performance, availability, and reliability.

Why is Scalability Important?

Scalability matters not only technically; it also directly affects profitability and brand reputation. In a fiercely competitive market, customers will not wait patiently for a slow-loading website or an application that frequently drops connections under load.

Possessing a scalable system helps businesses:

- Withstand market fluctuations: Stay ready for major promotions (Flash Sales) or media events that cause traffic spikes.

- Optimize costs: You only pay for what you actually use instead of maintaining a massive infrastructure that remains idle most of the time.

- Improve user experience: Maintain fast and stable response speeds regardless of whether there are 1,000 or 1,000,000 concurrent users.

Scalability Implementation Methods

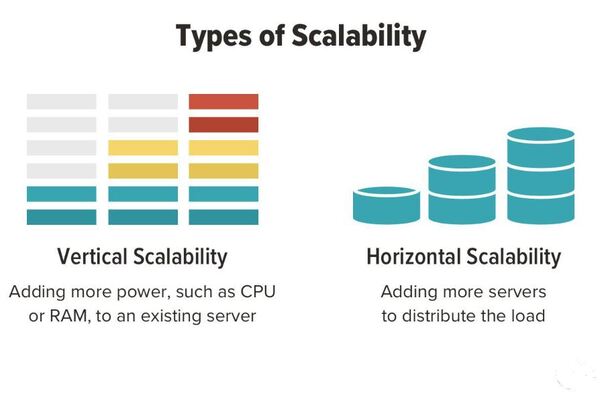

To achieve scalability goals, engineers typically apply two core strategies. Depending on the budget, data structure, and long-term objectives, businesses can choose one of the following methods or combine both:

Vertical Scaling

Vertical Scaling, or “Scaling Up,” is the process of increasing the power of an existing server by adding hardware resources such as CPU, RAM, or storage capacity.

- Pros: Easy to implement; does not require significant changes to the software source code.

- Cons: Always hits a hardware “ceiling” (you cannot add infinite RAM to a single server). Additionally, it often causes downtime during upgrades and creates a “Single Point of Failure” risk (if that single server fails, the entire system collapses).

Horizontal Scaling

Horizontal Scaling, or “Scaling Out,” involves adding more servers to the existing system to share the workload. Instead of one extremely powerful server, you have a network of multiple servers operating in parallel.

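- Pros: Capacity can keep growing by adding nodes, well beyond the limit of any single machine, as long as the workload can be split across them. If one server fails, the others continue to operate normally, ensuring high availability.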

- Cons: Requires a more complex software architecture, necessitating load balancing mechanisms and data synchronization between nodes.
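The trade-off between the two strategies can be made concrete with a little capacity arithmetic. The sketch below is only an illustration: the function names and the traffic figures are hypothetical, and it assumes each server handles a fixed number of requests per second, which real workloads rarely do exactly.

```python
def servers_needed(peak_rps: int, per_server_rps: int, spare_servers: int = 1) -> int:
    """Servers required to carry peak_rps, plus spares to survive failures."""
    if peak_rps < 0 or per_server_rps <= 0 or spare_servers < 0:
        raise ValueError("peak_rps and spare_servers must be >= 0, per_server_rps > 0")
    base = -(-peak_rps // per_server_rps)  # ceiling division without floats
    return base + spare_servers


def fits_on_one_server(peak_rps: int, largest_server_rps: int) -> bool:
    """True if Scaling Up alone could still carry the peak load."""
    return peak_rps <= largest_server_rps


# Hypothetical numbers: 12,000 requests/s at peak, 2,500 requests/s per node.
print(servers_needed(12_000, 2_500))      # 5 nodes for the load + 1 spare = 6
print(fits_on_one_server(12_000, 8_000))  # False: the hardware ceiling is reached
```

The spare server is what removes the Single Point of Failure: with one node down, the remaining five still cover the assumed peak.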

Factors Influencing Scalability

Simply adding more servers does not automatically make a system stronger. In reality, scalability is often hindered by “bottlenecks.” To optimize, we must consider the following factors, each of which directly affects how well a system scales:

Network Latency

When a system expands across multiple servers or geographical regions, the data transmission time between components increases. If services must constantly communicate over the network without optimization, the total response time will lengthen, reducing the effectiveness of scaling.
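A rough model shows why chatty communication hurts. The sketch below is a simplification with hypothetical names and numbers: it assumes a fixed round-trip time per network call and a fixed processing time per item, and it ignores bandwidth, serialization, and parallelism.

```python
def sequential_time_us(calls: int, round_trip_us: int, work_us: int) -> int:
    """Total time when every item is fetched with its own network call."""
    return calls * (round_trip_us + work_us)


def batched_time_us(calls: int, round_trip_us: int, work_us: int) -> int:
    """Total time when all items travel in one request: one round trip only."""
    return round_trip_us + calls * work_us


# Hypothetical figures: 50 items, 2 ms round trip, 0.1 ms of work per item.
print(sequential_time_us(50, 2_000, 100))  # 105000 microseconds
print(batched_time_us(50, 2_000, 100))     # 7000 microseconds
```

Under these assumptions the network round trips, not the actual work, dominate the response time, which is why batching or co-locating services is a common optimization.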

Data Storage and Access

The database is often the weakest link in the scalability puzzle. When the number of read/write connections surges, data locks and access conflicts can overload the database, even if the application layer (App Server) has been scaled significantly.

System Architecture

A “Monolithic” architecture is typically harder to scale efficiently because its components are tightly coupled: the whole application must be scaled as a single unit, even when only one feature is under load. In contrast, a Microservices architecture allows specific parts of the application to be scaled independently, so resources go to the most critical features.

Resource Utilization

Inefficient management of resources such as cache, threads, and connections leads to waste. Suboptimal source code can consume all the resources of a new server as soon as it is added to the cluster.
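One common way to keep connections under control is a bounded pool that reuses a fixed number of them instead of opening a new one per request. The following is a minimal sketch using only the Python standard library; `ConnectionPool` and the `factory` argument are hypothetical names, and a real database driver would usually ship its own pool.

```python
import queue
from contextlib import contextmanager
from typing import Any, Callable, Iterator


class ConnectionPool:
    """Fixed-size pool: connections are created once and then reused."""

    def __init__(self, factory: Callable[[], Any], size: int):
        self._idle: "queue.Queue[Any]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout: float = 1.0) -> Iterator[Any]:
        # Blocks until a connection is free; raises queue.Empty on timeout,
        # so overload shows up as a clear error instead of unbounded growth.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always hand the connection back


created = []


def open_connection() -> object:
    """Stand-in for an expensive connect call to a real database."""
    conn = object()
    created.append(conn)
    return conn


pool = ConnectionPool(open_connection, size=2)
for _ in range(5):
    with pool.connection() as conn:
        pass  # run a query with conn here

print(len(created))  # 2: five requests reused the same two connections
```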

Benefits of Scalability

Investing in Scalability is not just a technical task but a smart business strategy. When your system scales effectively, you reap invaluable benefits:

- High Stability: The system gains better self-healing capabilities and higher fault tolerance.

- Long-term Savings: Avoid the need to “tear down and rebuild” the entire system once the business reaches a certain scale.

- Competitive Advantage: The ability to deploy new features and serve a massive customer base faster than competitors.

- Flexibility: Easily integrate new technologies or migrate to the cloud.

Factors to Improve Scalability

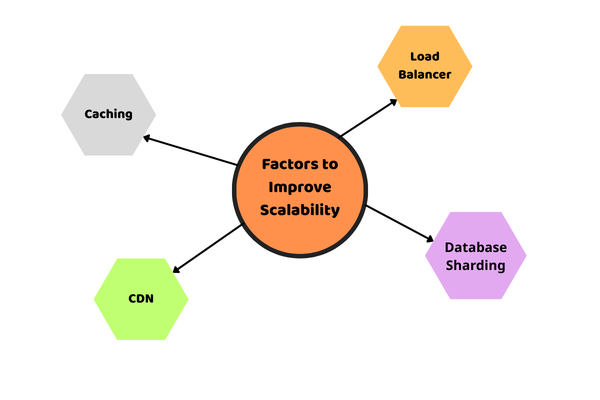

To transform a rigid system into a flexible one with high Scalability, experts often apply a specific technical “toolkit.” Below are the most important components to break through performance limits:

Caching

Caching stores frequently read data temporarily in high-speed memory (for example, in Redis or Memcached). Instead of running an expensive database query on every request, the system serves repeated reads from the cache, which reduces database load and can cut response times substantially; the actual gain depends on the cache hit rate.
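The read path described here is often called the cache-aside pattern. Below is a minimal sketch of it, assuming an in-process dictionary as a stand-in for an external cache such as Redis; `TTLCache`, `get_or_load`, and `slow_database_lookup` are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[str], str]) -> str:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]  # fresh entry: the database is not touched
        self.misses += 1
        value = loader(key)  # missing or expired: fall back to the source
        self._store[key] = (now + self.ttl_seconds, value)
        return value


db_calls = []


def slow_database_lookup(user_id: str) -> str:
    """Stand-in for an expensive query."""
    db_calls.append(user_id)
    return f"profile-of-{user_id}"


cache = TTLCache(ttl_seconds=60.0)
first = cache.get_or_load("42", slow_database_lookup)
second = cache.get_or_load("42", slow_database_lookup)
print(len(db_calls))  # 1: the second read was served from the cache
```

The time-to-live is the main design choice: a longer value raises the hit rate but lets readers see stale data for longer.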

Load Balancer

A Load Balancer acts like a traffic cop, distributing incoming requests across the healthy servers in a cluster, typically using a policy such as round robin or least connections. This ensures that no single server is overloaded while others sit idle, which is the key to successful Horizontal Scaling.
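Two common distribution policies are round robin (take servers in turn) and least connections (pick the server with the fewest requests in flight). The sketch below illustrates both under simplifying assumptions: the class names and server labels are hypothetical, and health checks, weights, and concurrency control are left out.

```python
from itertools import cycle
from typing import Dict, List


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers: List[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self._ring = cycle(servers)

    def pick(self) -> str:
        return next(self._ring)


class LeastConnectionsBalancer:
    """Picks the server with the fewest active requests."""

    def __init__(self, servers: List[str]):
        if not servers:
            raise ValueError("at least one server is required")
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the request has finished


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Round robin works well when requests cost roughly the same; least connections tends to cope better when some requests run much longer than others.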

CDN (Content Delivery Network)

A CDN serves static files (images, videos, CSS) from edge servers located close to the user. Offloading static content allows the origin server to focus on processing complex business logic, thereby improving overall Scalability.

Database Sharding

Sharding is the process of breaking a large database into smaller parts (shards) and placing them on different servers. Each shard contains a portion of the customer data, reducing pressure on a single server and allowing the data tier to scale horizontally.
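A simple way to decide which shard holds a record is to hash its key and take the result modulo the number of shards. The sketch below assumes that approach; `shard_for` and `ShardedStore` are hypothetical names, and plain dictionaries stand in for separate database servers.

```python
import hashlib
from typing import Dict, List, Optional


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index using a stable hash."""
    # hashlib is used instead of the built-in hash(), which is randomized
    # per process for strings and would route keys differently after a restart.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Routes each customer record to exactly one shard."""

    def __init__(self, shard_count: int):
        if shard_count <= 0:
            raise ValueError("shard_count must be positive")
        self.shards: List[Dict[str, dict]] = [{} for _ in range(shard_count)]

    def put(self, customer_id: str, record: dict) -> None:
        self.shards[shard_for(customer_id, len(self.shards))][customer_id] = record

    def get(self, customer_id: str) -> Optional[dict]:
        return self.shards[shard_for(customer_id, len(self.shards))].get(customer_id)


store = ShardedStore(shard_count=4)
for i in range(1000):
    store.put(f"customer-{i}", {"id": i})

print([len(shard) for shard in store.shards])  # roughly 250 records per shard
```

A known drawback of modulo routing is that changing the number of shards remaps most keys; consistent hashing is a common alternative when shards are added or removed often.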

Challenges and Limitations of Scalability

While offering many benefits, the journey to mastering Scalability is not always smooth. The greatest challenge is complexity. As a system grows, management, monitoring, and security become increasingly difficult.

- Data Consistency: In distributed systems, ensuring that all servers see the latest data at the same time is a hard problem. The CAP theorem captures the trade-off: when a network partition occurs, a system must give up either consistency or availability.

- Management Costs: You need a highly skilled engineering team to operate complex systems like Kubernetes, Microservices, or Distributed Databases.

- Data Layer Design Flaws: Sometimes, hardware expansion cannot solve the problem if the table structures or SQL queries were poorly designed from the start.

Scalability is not a destination but a process of continuous improvement. A system that scales well serves as a solid launchpad for business success in the digital age. Start with a flexible architecture, use the right tools like Caching and Load Balancers, and always stay ready for growth scenarios that exceed expectations.